1. Introduction

Machine Learning On OpenLab (MOO) aims to help data experts reproduce machine learning environments. The project is currently based on OpenLab.

1.1 MOO

MOO exists because machine learning work is hard to reproduce across multiple environments. ML typically lacks:

- Declarative infrastructure, such as the compute, storage and network layers of the stack.

- Declarative systems, such as the kernel drivers, configuration and management layers of the stack.

- A pipeline description

- Data

- Separation of discrete steps (data preparation, featurization, estimation, evaluation, prediction, etc.)

These should be defined declaratively, using vendor-neutral technology, to simplify reuse regardless of the choice of environment.

MOO therefore defines model metadata that describes the environment of a trained model. This includes the framework (for example, TensorFlow version 1.12.0 or 2.0.0), the Python runtime, the Python packages, and any necessary model description. MOO uses this information to reproduce the training or inference environment, which includes:

- Installing all necessary services on the target host. (Currently, the project only supports the TensorFlow framework and the Python runtime.)

- Providing several ways (including a dev mode) to access the environment and run training or inference in it.

- Retrieving the training or inference results so you can check the performance of your model.

All of the above is driven by the model metadata you pass to MOO.
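To illustrate how metadata can drive environment setup, here is a minimal Python sketch. It assumes the metadata has already been parsed into a dictionary (for example, from metadata.yaml) and that the framework is installed from PyPI with pip. The function name `environment_plan` and the pip-based approach are hypothetical and do not describe MOO's actual implementation.

```python
from typing import Dict, List, Tuple


def environment_plan(metadata: Dict) -> Tuple[str, List[str]]:
    """Hypothetical sketch: derive an interpreter and a pip install command
    from MOO-style model metadata. Not MOO's real implementation."""
    framework = metadata["framework"]
    # Assumption: fall back to a generic "python" interpreter if no runtime is given.
    runtime = framework.get("runtime", "python")
    # The spec describes runtime as an object with a name and an optional
    # requirement list; the published example uses a plain string instead.
    if isinstance(runtime, dict):
        interpreter = runtime["name"]
        extra = list(runtime.get("requirement", []))
    else:
        interpreter = runtime
        extra = []
    # Pin the framework to the exact version recorded in the metadata.
    packages = [f"{framework['name']}=={framework['version']}"] + extra
    command = [interpreter, "-m", "pip", "install"] + packages
    return interpreter, command
```

For a runtime object named `python2.7` with `requirement: [numpy]`, the sketch produces `python2.7 -m pip install tensorflow==1.12.0 numpy`. Pinning the exact framework version is what makes the rebuilt environment match the one the model was trained in.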

MOO provides CPU-based machine learning environments. It provisions an environment, runs the training, and returns the training results to you. If you want to access the environment to continue developing the model, or to provide new training data to continue the training process, MOO provides the access information you need.

1.2 OpenLab

OpenLab is a community program to test and improve support for cloud-related SDKs and tools, as well as platforms like Kubernetes, Terraform, Cloud Foundry and more. The goal is to improve the usability, reliability and resiliency of tools and applications for hybrid and multi-cloud environments. All of the machine learning environments are provided by OpenLab, which manages their life cycle. If you want to know more, please visit OpenLab.

2. How It Works

2.1 Preparation

MOO provides a web UI to simplify the whole process. Before you start using MOO, however, you need to complete the following steps.

-

Module ZIP file preparation

MOO will accept a ZIP file or URL which direct to a ZIP file. The ZIP file includes:

-

metadata.yaml

The YAML needs to try to descript the model enviroment and model itself as much as possible.

-

model python file(Currently only support tensorflow python script)

The python file is treated as the entrypoint to training the specific model. Notes: The model file provided must be compssed to ZIP format.

-

-

Decide the host place of the ZIP file

-

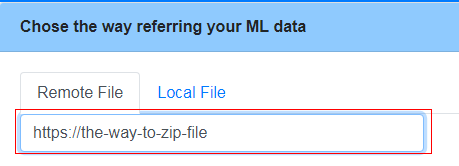

Host on the website, such as the Object Storage Sever in Huawei Public Cloud.

MOO supports fetching the ZIP file via URL, you can just provide a URL to MOO, it will download the ZIP file and begin the train process automaticly.

-

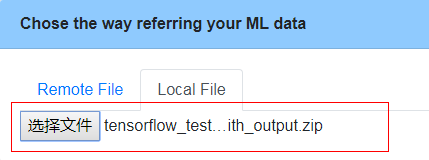

On your local enviroment.

MOO also supports uploading the ZIP file directly.

-

-

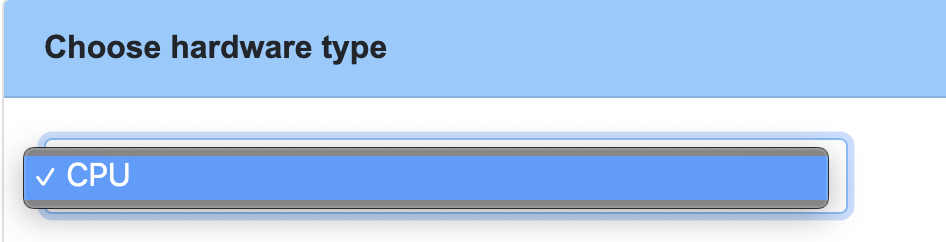

CPU

Right now CPU backend is supported
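The model ZIP file described above can be produced with Python's standard `zipfile` module. The sketch below is hypothetical. It assumes `metadata.yaml` and the entry-point script sit at the root of the archive, which the text does not specify, and the helper names `build_model_zip` and `check_model_zip` are illustrative only.

```python
import io
import zipfile
from typing import List


def build_model_zip(metadata_yaml: str, entry_point: str, source: str) -> bytes:
    """Pack metadata.yaml and the training script into an in-memory ZIP.

    Assumption: both files live at the root of the archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.writestr("metadata.yaml", metadata_yaml)
        archive.writestr(entry_point, source)
    return buffer.getvalue()


def check_model_zip(data: bytes) -> List[str]:
    """Return a list of problems found in a candidate model ZIP (empty if none)."""
    problems = []
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        names = archive.namelist()
    if "metadata.yaml" not in names:
        problems.append("metadata.yaml is missing")
    if not any(name.endswith(".py") for name in names):
        problems.append("no Python entry-point script found")
    return problems
```

To produce a file for upload or hosting, write the returned bytes to disk, for example with `open("model.zip", "wb").write(data)`. The file name `model.zip` is only an example. Running `check_model_zip` before submitting catches a missing metadata file early, instead of after the job has started.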

2.2 WorkFlow

Once the model ZIP file is prepared, you can access the web UI.

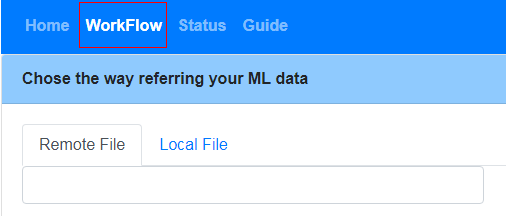

a. Click the "WorkFlow" sub-tab:

b. Choose how to upload your model ZIP file:

c. Choose CPU:

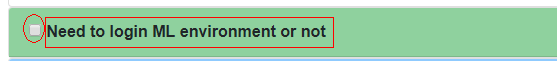

d. Decide whether to tick "Login the Environment or not" (this option must be ticked if you are running an SBIR job):

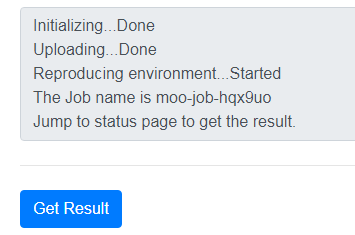

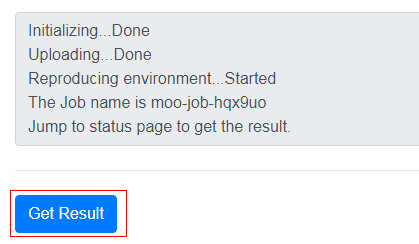

e. Click the Submit button and enjoy. The job process history is shown here:

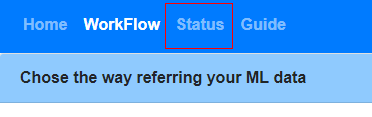

Your model has now been submitted successfully. To check your job status, click the "Get Result" button or go to the "Status" page.

Or

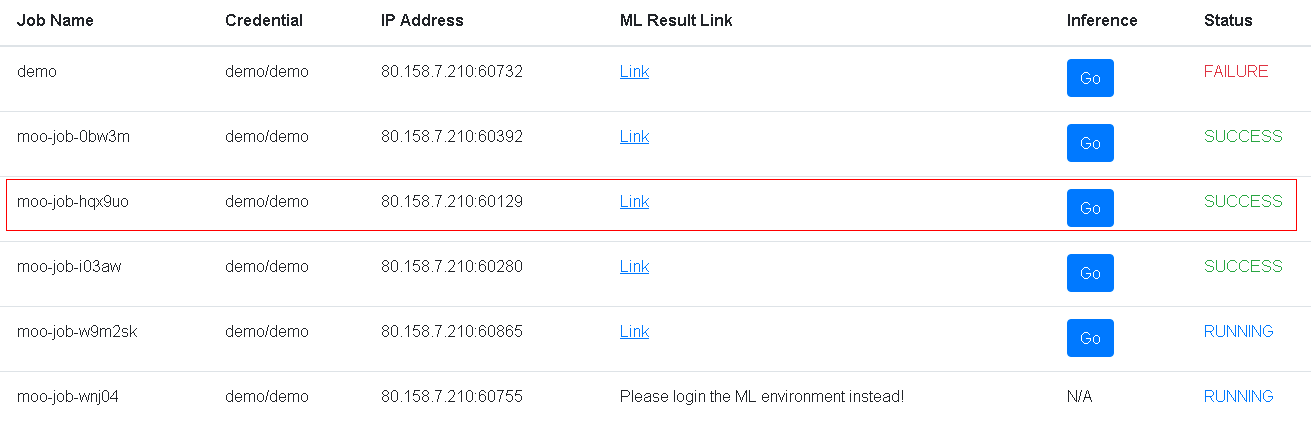

In this example, the job name is moo-job-hqx9uo. It is a CPU job, and we do not want to log in to the environment (we did not tick "Login the Environment or not"). We jump to the Status page to see the job status. The page has several columns:

- Job Name: the name of the submitted job.

- Credential: the username and password for logging in to the ML environment provided by MOO.

- IP Address: the public IP address and external port for SSH access.

- ML Result Link: the link to the process log or the trained model. After training, you can access both the trained model and the training log from the MOO environment.

- Inference: the inference page, available after model training completes.

- Status: the MOO job status.
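The Credential and IP Address columns provide what you need to open an SSH session to the environment. Here is a minimal sketch that assumes the IP Address column is shown as `host:port`. The exact display format is an assumption. The sketch falls back to the default SSH port 22 when no port is given, and you enter the password from the Credential column at the SSH prompt.

```python
from typing import List


def ssh_command(username: str, ip_address: str) -> List[str]:
    """Build an ssh command line from the Credential and IP Address columns.

    Assumption: ip_address looks like "203.0.113.10:2222" or just "203.0.113.10"."""
    host, sep, port = ip_address.rpartition(":")
    if not sep:
        # No port shown: use the standard SSH port.
        host, port = ip_address, "22"
    if not port.isdigit():
        raise ValueError(f"unexpected port in {ip_address!r}")
    return ["ssh", "-p", port, f"{username}@{host}"]
```

For example, `" ".join(ssh_command("moo", "203.0.113.10:2222"))` gives `ssh -p 2222 moo@203.0.113.10`, where the username and address are placeholders. You can also pass the list to `subprocess.run` to start the session. Plain IPv6 addresses are not handled by this sketch.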

Next, search for the moo-job-hqx9uo job.

As shown above, moo-job-hqx9uo was submitted as a job that does not log in to the environment, so its Status is SUCCESS and both the ML Result Link and the Inference page have been generated. If you choose to log in to the environment, MOO keeps the environment for a while. In that case, the ML Result Link is replaced by "Please login the ML enviroment instead!" and no Inference page is generated.

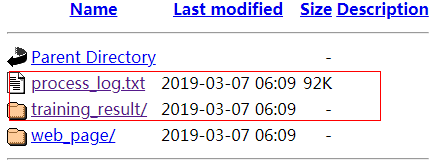

f. Go to the Result Link

In "process.log", you will find the complete training history of your model. The "training_result" directory contains the newly trained model.

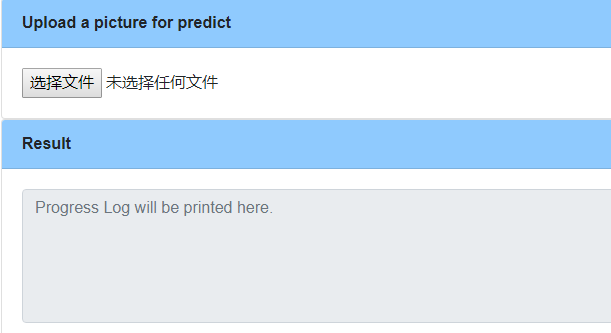

g. Try Online Inference

Click the Inference "Go" button to open the Inference page. Upload a new picture or file to run a prediction, and see the prediction result in the Result block below.

3. Metadata Definition

The model metadata spec aims to describe, as completely as possible, the model training environment, the model application, and the parameters used during the training process.

3.1 Concept

We propose a standard for describing machine learning environments: the machine learning metadata spec. With a metadata YAML file, operators can typically reproduce the environment easily. The spec is currently only a proof of concept (POC), and feedback is welcome.

3.2 Parameters

Framework

| Key | Type | Description |
|-----|------|-------------|
| name | String | The framework name |
| version | String | The framework version |
| runtime | Object | The framework runtime object |

runtime

| Key | Type | Description |
|-----|------|-------------|
| name | String | The framework runtime name |
| requirement (optional) | List | The third-party libraries that the job depends on |

Model

| Key | Type | Description |
|-----|------|-------------|
| name | String | The name of the model |
| version | String | The version of the model |
| source | String | The address of the model file. It can be remote or local. |
| creator | String | The author of the model |
| time | Date | The creation time of the model |

Dataset

| Key | Type | Description |
|-----|------|-------------|
| name | String | TBD |
| version | String | TBD |
| source | String | TBD |

data_process

| Key | Type | Description |
|-----|------|-------------|
| data_load | TBD | TBD |
| data_split | TBD | TBD |
| padding | TBD | TBD |

model_architecture

| Key | Type | Description |
|-----|------|-------------|
| TBD | TBD | TBD |
| TBD | TBD | TBD |
| TBD | TBD | TBD |

training_params

| Key | Type | Description |
|-----|------|-------------|
| learning_rate | Float | TBD |
| loss | String | TBD |
| batch_size | Int | TBD |
| epoch | Int | TBD |
| optimizer | String | TBD |
| train_op | String | TBD |
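The types in these tables can be checked mechanically once metadata.yaml has been parsed into a dictionary. The following is a hypothetical sketch, not part of MOO. It checks only keys that are present, because the spec is a POC and the example in 3.3 omits several fields. It also accepts `runtime` as either an object or a plain string, since the example uses the string form.

```python
import datetime
from typing import Dict, List

# Expected types transcribed from the tables above (TBD entries omitted).
FRAMEWORK_TYPES = {"name": str, "version": str, "runtime": (dict, str)}
RUNTIME_TYPES = {"name": str, "requirement": list}
MODEL_TYPES = {"name": str, "version": str, "source": str,
               "creator": str, "time": datetime.date}


def _check(section: Dict, expected: Dict, prefix: str) -> List[str]:
    """Report keys whose values do not have the type listed in the spec."""
    errors = []
    for key, value in section.items():
        if key in expected and not isinstance(value, expected[key]):
            errors.append(f"{prefix}.{key} has unexpected type {type(value).__name__}")
    return errors


def validate_metadata(metadata: Dict) -> List[str]:
    """Hypothetical type check of the framework and model sections."""
    errors = []
    # Assumption: both top-level sections are required.
    for section in ("framework", "model"):
        if section not in metadata:
            errors.append(f"missing section: {section}")
    framework = metadata.get("framework", {})
    errors += _check(framework, FRAMEWORK_TYPES, "framework")
    if isinstance(framework.get("runtime"), dict):
        errors += _check(framework["runtime"], RUNTIME_TYPES, "framework.runtime")
    errors += _check(metadata.get("model", {}), MODEL_TYPES, "model")
    return errors
```

This check is useful because YAML loaders such as PyYAML read an unquoted `version: 2.0` as a float but `version: 1.12.0` as a string. Quoting versions (`version: "2.0"`) keeps them as the String type that the spec requires.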

3.3 Example

    framework:
      name: tensorflow
      version: 1.12.0
      runtime: python2.7
    model:
      name: EMNIST
      entry_point: tensorflow_test_mnist.py
      output_folder: training_result
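The example can be read as a recipe for launching training. The sketch below is hypothetical. It mirrors the example as a Python dictionary and assumes the `runtime` string names the interpreter. It also assumes results are written to `output_folder` under an illustrative working directory, `/workspace`. The spec does not confirm any of these details.

```python
from pathlib import PurePosixPath
from typing import Dict, List, Tuple

# The metadata example above, as it would look after YAML parsing.
EXAMPLE_METADATA = {
    "framework": {"name": "tensorflow", "version": "1.12.0", "runtime": "python2.7"},
    "model": {
        "name": "EMNIST",
        "entry_point": "tensorflow_test_mnist.py",
        "output_folder": "training_result",
    },
}


def launch_plan(metadata: Dict, workdir: str = "/workspace") -> Tuple[List[str], str]:
    """Return the training command and the expected output directory.

    Hypothetical: /workspace and the "training_result" fallback are assumptions."""
    runtime = metadata["framework"]["runtime"]
    # Accept both the object form from the spec and the string form from the example.
    interpreter = runtime["name"] if isinstance(runtime, dict) else runtime
    model = metadata["model"]
    command = [interpreter, model["entry_point"]]
    output_dir = PurePosixPath(workdir) / model.get("output_folder", "training_result")
    return command, str(output_dir)
```

For the example, `launch_plan(EXAMPLE_METADATA)` yields the command `python2.7 tensorflow_test_mnist.py` and the output directory `/workspace/training_result`. That directory matches the "training_result" folder that appears behind the ML Result Link.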